Difference between revisions of "2023W4:References"

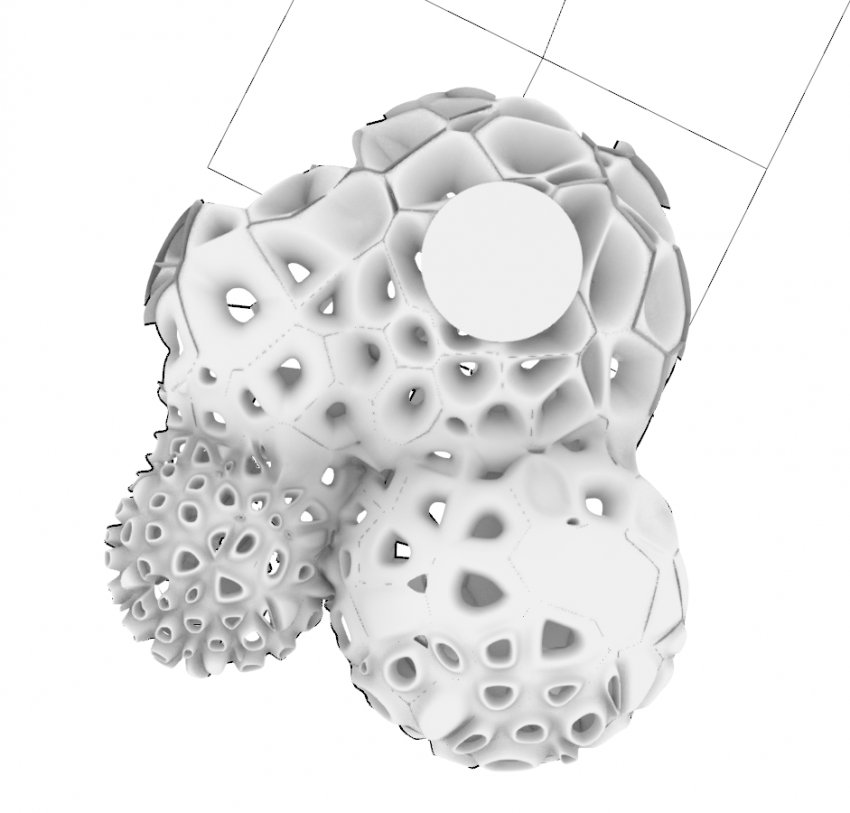

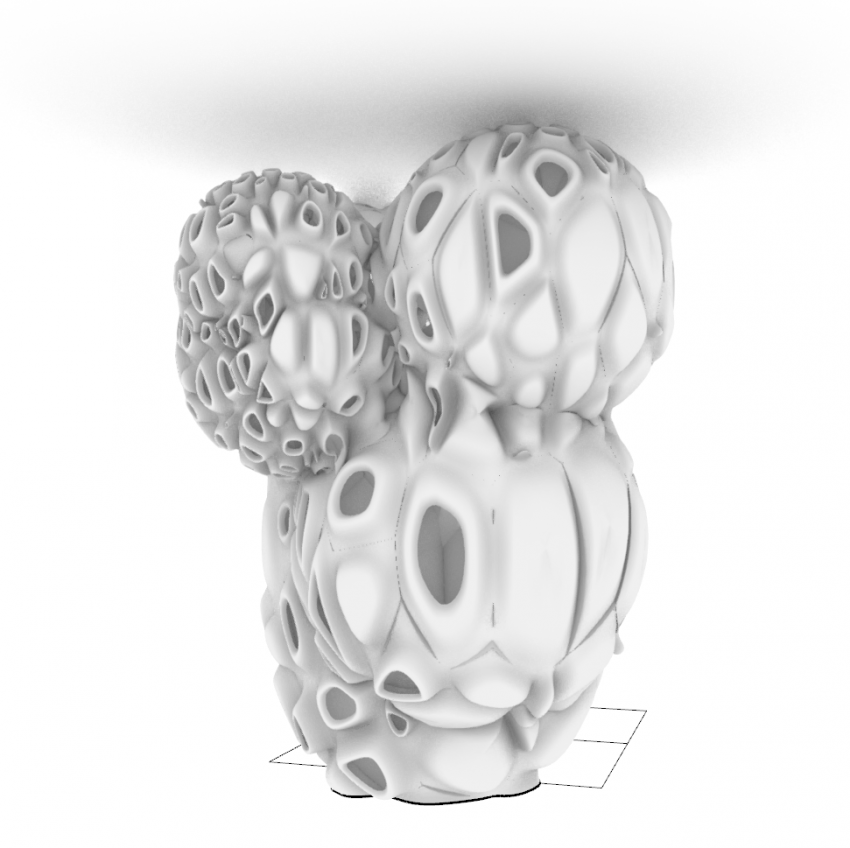

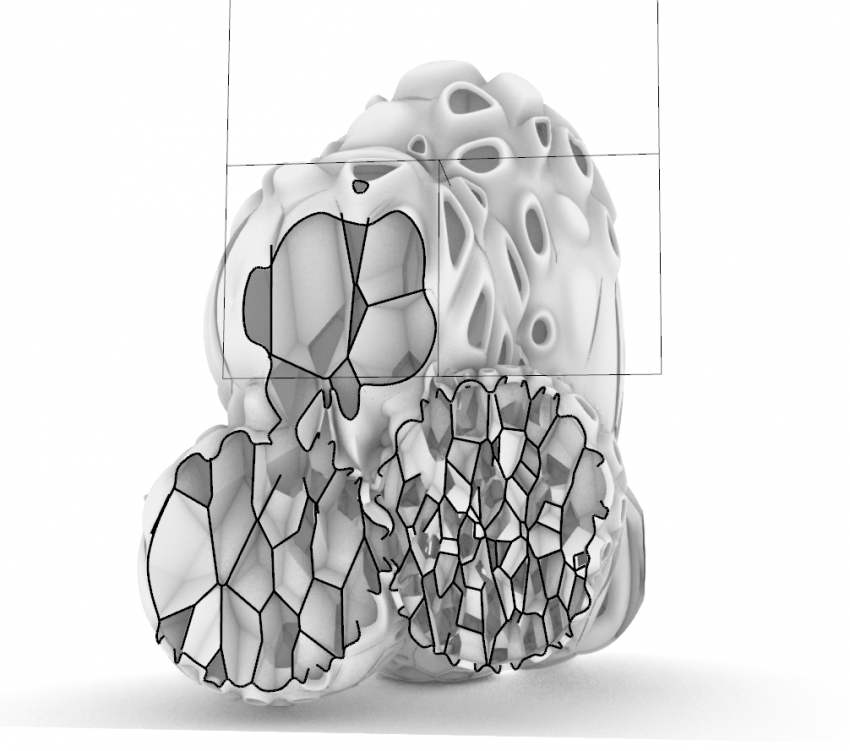

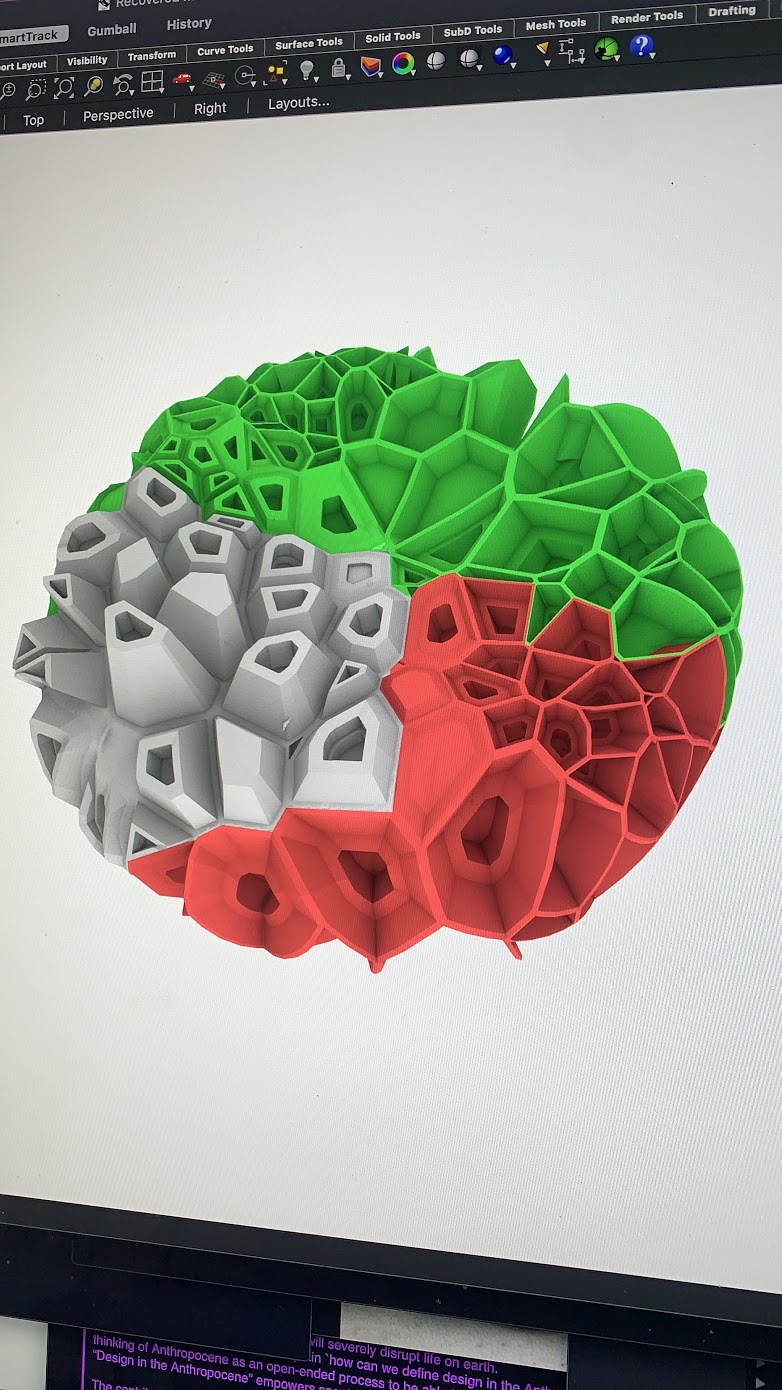


Latest revision as of 07:53, 14 April 2023

MSc 2 IAP 2023: Rhizome 2.0

PROJECTS

CS (http://cs.roboticbuilding.eu/index.php/Shared:2019Final and http://cs.roboticbuilding.eu/index.php/2019MSc3)

HB/RB graduation projects (https://repository.tudelft.nl/islandora/object/uuid%3A25f7cc18-7400-4c65-a205-15530982d504, http://cs.roboticbuilding.eu/index.php/project02:P5, https://drive.google.com/drive/folders/18dBlk9IpK4tr-U6HJwMl2wcB52fAlb8J, and https://repository.tudelft.nl/islandora/object/uuid%3A0461dd93-d335-4e43-b194-a035805176d6)

Bigelow (https://en.wikipedia.org/wiki/Bigelow_Expandable_Activity_Module)

READINGS

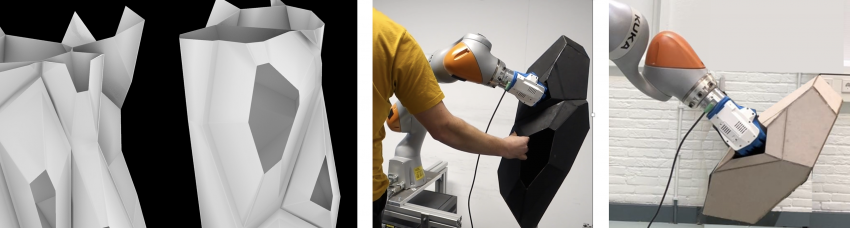

Bier, H., Khademi, S., van Engelenburg, C. et al. Computer Vision and Human–Robot Collaboration Supported Design-to-Robotic-Assembly. Constr Robot (2022). https://doi.org/10.1007/s41693-022-00084-1

Bier, H., Cervone, A., and Makaya, A. Advancements in Designing, Producing, and Operating Off-Earth Infrastructure, Spool CpA #4, 2021. https://doi.org/10.7480/spool.2021.2.6056

Pillan, M., Bier, H., Green, K. et al. Actuated and Performative Architecture: Emerging Forms of Human-Machine Interaction, Spool CpA #3, 2020. https://doi.org/10.7480/spool.2020.3.5487

Lee, S. and Bier, H., Aparatisation of/in Architecture, Spool CpA #2, 2019. https://doi.org/10.7480/spool.2019.1.3894

Bier, H. Robotic Building, Adaptive Environments Springer Book Series, Springer 2018 (https://www.springer.com/gp/book/9783319708652)

Hoekman, W. Regolith as future habitat construction material and bio chitinous manufacturing: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0238606

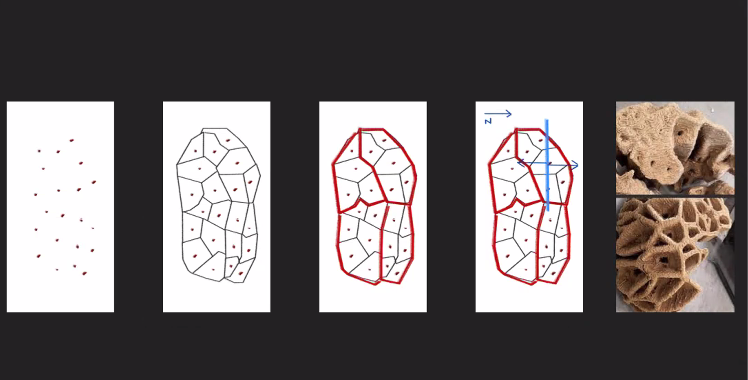

Literature on CV algorithms that employ structural reasoning by mapping a raster image - either a noisy image (first fig.) or a floor plan drawing (second fig.) - into a corresponding geometrical representation. This is equivalent to the problem addressed in the course, i.e., learning to map from an image of the Voronoi-like structure (in which the lines are not explicitly defined) to its geometrical counterpart (in which lines and surfaces are explicitly defined). This geometrical representation can subsequently be leveraged for the task at hand:

https://openaccess.thecvf.com/content_iccv_2017/html/Liu_Raster-To-Vector_Revisiting_Floorplan_ICCV_2017_paper.html

https://openaccess.thecvf.com/content/CVPR2022/html/Chen_HEAT_Holistic_Edge_Attention_Transformer_for_Structured_Reconstruction_CVPR_2022_paper.html

https://openaccess.thecvf.com/content/ICCV2021/html/Stekovic_MonteFloor_Extending_MCTS_for_Reconstructing_Accurate_Large-Scale_Floor_Plans_ICCV_2021_paper.html
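To make the raster-to-geometry idea concrete, here is a minimal sketch in plain Python. It is not the method of any of the papers above, which rely on learned models; it is a hand-written stand-in that assumes a clean, noise-free label image. The function names (`rasterize_voronoi`, `extract_geometry`), the grid size, and the seed positions are all hypothetical choices made for illustration. The sketch first produces a raster in which the cell boundaries are only implicit, then recovers an explicit description: which cells share an edge, and where three or more cells meet in a vertex.

```python
def rasterize_voronoi(seeds, width, height):
    """Label every pixel with the index of its nearest seed.

    Pixel centres sit at integer coordinates; the boundaries between
    cells are only implicit in the resulting label image.
    """
    return [
        [min(range(len(seeds)),
             key=lambda i: (x - seeds[i][0]) ** 2 + (y - seeds[i][1]) ** 2)
         for x in range(width)]
        for y in range(height)
    ]


def extract_geometry(labels):
    """Recover explicit structure from a label raster.

    Returns (edges, vertices):
      edges    -- set of (a, b) cell-index pairs, a < b, whose regions
                  touch along a pixel side
      vertices -- list of (x, y) pixel-corner positions where at least
                  three different cells meet
    """
    height, width = len(labels), len(labels[0])
    edges, vertices = set(), []
    for y in range(height):
        for x in range(width):
            here = labels[y][x]
            # Compare with the right and lower neighbours (4-connectivity).
            if x + 1 < width and labels[y][x + 1] != here:
                edges.add(tuple(sorted((here, labels[y][x + 1]))))
            if y + 1 < height and labels[y + 1][x] != here:
                edges.add(tuple(sorted((here, labels[y + 1][x]))))
            # A 2x2 window holding three or more labels marks a vertex
            # at the corner shared by its four pixels.
            if x + 1 < width and y + 1 < height:
                window = {here, labels[y][x + 1],
                          labels[y + 1][x], labels[y + 1][x + 1]}
                if len(window) >= 3:
                    vertices.append((x + 0.5, y + 0.5))
    return edges, vertices


# Hypothetical example: four seeds placed symmetrically on a 10 x 10 grid.
seeds = [(2, 2), (7, 2), (2, 7), (7, 7)]
labels = rasterize_voronoi(seeds, 10, 10)
edges, vertices = extract_geometry(labels)
print(sorted(edges))   # [(0, 1), (0, 2), (1, 3), (2, 3)]
print(vertices)        # [(4.5, 4.5)]
```

On noisy photographs of a physical Voronoi-like structure the label image is not available directly, which is where the learned approaches in the literature above come in; the explicit edge and vertex lists are the kind of output they aim to produce.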

PRESENTATIONS

Voronoi-based D2RP

TEMPLATES

Report:

https://docs.google.com/document/d/1fNNps7UfgIfoOH8G0ar-5Bzz26-up8Y4/edit

Premiere project (9GB):

https://drive.google.com/file/d/1fW5gJpLXPioM9SZoCJAiNOr817AWGZCu/view

EXAMPLES

Conceptual and material systems:

https://docs.google.com/document/d/1HuC4LMdFcbEiV-xcFDMZns1TAx9q0Gx2T3S5jMg7Wjs/edit?usp=sharing

Scalable porosity and componential logic: