Revision as of 01:25, 12 June 2018

H8A2 - Smart Sleeve

Authors

- Pedro Santos

- Caio Santos

Tutors

- Henriette Bier (Associate Professor Robotic Building TUD)

- Alex Liu Cheng (PhD student Robotic Building TUD)

Collaborators

- Marcela Sabino (Director of the Museum of Tomorrow Laboratory)

- Ricardo Weissenberg (Creative Maker at the Museum of Tomorrow Laboratory)

- Eduardo Migueles (Creative Maker at the Museum of Tomorrow Laboratory)

The idea

The Smart Sleeve is a proof of concept for a wearable that can interact with a smart environment. The project was developed during a three-day workshop at the 8th edition of Hiperorgânicos (International Symposium on Research in Art, Hybridization, Biotelematics and Transculturalism), held from 22 to 27 May 2018 at the Museu do Amanhã in Rio de Janeiro, Brazil.

With this workshop, Henriette Bier and Alexander Liu Cheng aimed to introduce students to Design-to-Robotic-Production and -Operation (D2RPO) as a means of achieving physical and sensory reconfiguration.

What if we could move objects just by pointing at them?

How we did it

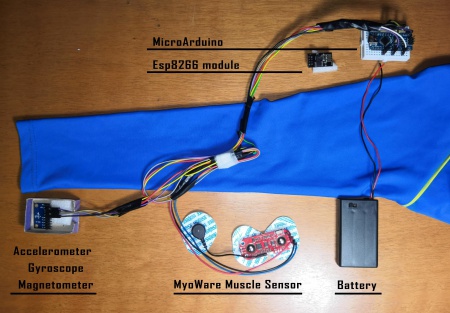

The Smart Sleeve is composed of an accelerometer, a gyroscope, a magnetic compass and a MyoWare muscle sensor. Their readings are collected by an ATmega328 microcontroller and sent through a 433 MHz RF transmitter to a receiver connected to a Raspberry Pi, where the data is fed into Processing, which generates a virtual model of the smart environment.
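The page does not document how the readings travel over the RF link. As a minimal sketch, assuming the microcontroller packs one sample of every sensor into a fixed-size binary frame, the receiving side could validate and unpack frames as below. The field order, the int16 encoding, the 0xAA start byte and the XOR checksum are all hypothetical choices for illustration, not taken from the project's repository.

```python
import struct
from dataclasses import dataclass

# Hypothetical frame layout: start byte, 8 little-endian int16 fields, XOR checksum byte.
START_BYTE = 0xAA
PAYLOAD_FMT = "<8h"  # accel x/y/z, gyro x/y/z, compass heading, EMG envelope
FRAME_LEN = 1 + struct.calcsize(PAYLOAD_FMT) + 1  # 18 bytes


@dataclass
class SleeveSample:
    accel: tuple  # raw accelerometer counts (x, y, z)
    gyro: tuple   # raw gyroscope counts (x, y, z)
    heading: int  # compass heading in tenths of a degree (hypothetical scaling)
    emg: int      # MyoWare envelope as an ADC reading


def checksum(data: bytes) -> int:
    """XOR of all payload bytes; cheap enough to compute on an 8-bit MCU."""
    c = 0
    for b in data:
        c ^= b
    return c


def encode(sample: SleeveSample) -> bytes:
    """What the sender would transmit for one sample."""
    payload = struct.pack(PAYLOAD_FMT, *sample.accel, *sample.gyro,
                          sample.heading, sample.emg)
    return bytes([START_BYTE]) + payload + bytes([checksum(payload)])


def decode(frame: bytes):
    """Return a SleeveSample, or None if the frame is malformed or corrupted."""
    if len(frame) != FRAME_LEN or frame[0] != START_BYTE:
        return None
    payload, received = frame[1:-1], frame[-1]
    if checksum(payload) != received:
        return None
    v = struct.unpack(PAYLOAD_FMT, payload)
    return SleeveSample(accel=v[0:3], gyro=v[3:6], heading=v[6], emg=v[7])


if __name__ == "__main__":
    sample = SleeveSample(accel=(120, -45, 16384), gyro=(3, -7, 0),
                          heading=2705, emg=512)
    frame = encode(sample)
    print(len(frame), decode(frame) == sample)
```

The checksum costs one extra byte per frame, which seems a reasonable trade on inexpensive 433 MHz modules that are prone to interference: a frame that fails the check is simply dropped and the next sample takes its place.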

The wearable can recognize arm and hand gestures as well as their relative position in an intelligent environment. Combined with some processing, these abilities make it possible to send commands to an actuator in a smart environment just by pointing at the desired object.
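The pointing logic itself is not described on this page. A minimal sketch, assuming the virtual environment stores each object's floor-plan position relative to the user, that the compass heading (degrees clockwise from north) approximates the arm's pointing direction, and that a clenched fist read through the MyoWare envelope acts as the "select" gesture. The object names, coordinates, the 15° tolerance and the 600/400 thresholds are hypothetical.

```python
import math


def angle_diff(a: float, b: float) -> float:
    """Smallest signed difference a - b in degrees, wrapped to [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0


def bearing(user_xy, obj_xy) -> float:
    """Compass-style bearing from user to object: 0 = +y (north), clockwise."""
    dx = obj_xy[0] - user_xy[0]
    dy = obj_xy[1] - user_xy[1]
    return math.degrees(math.atan2(dx, dy)) % 360.0


def pointed_object(heading, user_xy, objects, tolerance=15.0):
    """Name of the object closest to the pointing direction, or None if none is within tolerance."""
    best, best_err = None, tolerance
    for name, xy in objects.items():
        err = abs(angle_diff(heading, bearing(user_xy, xy)))
        if err <= best_err:
            best, best_err = name, err
    return best


class ClenchDetector:
    """Fires once per muscle contraction, using two thresholds (hysteresis)."""

    def __init__(self, on_level=600, off_level=400):
        self.on_level, self.off_level = on_level, off_level
        self.active = False

    def update(self, emg: int) -> bool:
        if not self.active and emg >= self.on_level:
            self.active = True
            return True  # rising edge: the clench has just started
        if self.active and emg <= self.off_level:
            self.active = False  # muscle relaxed, re-arm the detector
        return False


def step(heading, emg, user_xy, objects, detector):
    """Per-sample decision: the object to send a command to, or None."""
    target = pointed_object(heading, user_xy, objects)
    if detector.update(emg) and target is not None:
        return target
    return None
```

Two thresholds instead of one keep a held clench from retriggering while the EMG envelope hovers around a single cut-off, so each squeeze maps to a single command for whichever object is being pointed at.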

The Wearable

Video: https://www.youtube.com/watch?v=Ei8LQ3Nb_94

Source code: https://github.com/oiacflu/ssleeve